
In our previous post, we introduced the IQVIA LLM Trustworthiness Toolkit (TWTK): a plug-and-play, model- and problem-agnostic module that adds several layers of trust to AI by enabling reliable output assessment, smart routing, and automation using trustworthiness scores for every Large Language Model (LLM) output.

Figure 1: With TWTK, GenAI systems can automatically evaluate lack of consistency (a good indicator of hallucination) for key values and prioritize human evaluation in batch GenAI processes, while also powering Human-In-The-Loop chatbots.

The focus of this blog post will be on a key layer that TWTK offers out-of-the-box: the ability to generate a calibration report.

What is a calibration report, and why do I need one?

LLMs are, like any other machine learning (ML) model, probabilistic models that take some context as input and return predictions (or responses) as probabilities. This means that LLMs are never 100% certain—no ML model is—but hopefully they are certain enough to add value.

Ideally, we would be able to treat such probabilities as degrees of confidence, so that we can, for example, decide for ourselves to modify or reject a response from an LLM if confidence is too low. However, there is no guarantee by default that a probability returned by a model reflects how likely the response is to be correct: the model can return a high confidence for an incorrect or partially correct response (see Figure 2).

Figure 2: An example where GPT 3.5 is very confident in a wrong answer: it should have answered A-T and leukaemia.

This is precisely where calibration becomes useful: calibration (of ML models) is defined as the degree of alignment between a model’s accuracy and its returned probabilities, as depicted in Figure 3. For example, if a model assigns a 75% average probability to a set of examples, we’d expect the model to be 75% accurate for that set. If the degree of alignment is low, we say that the model is uncalibrated: its probabilities cannot be read directly as confidence scores, and calibration is needed.

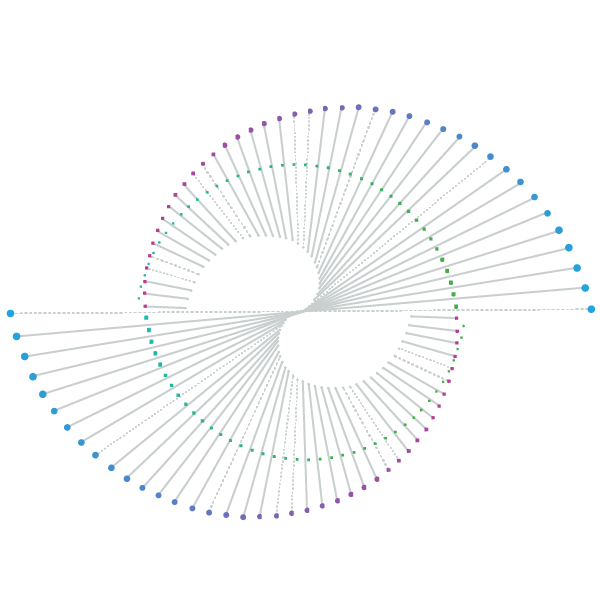

Figure 3: Two reliability diagrams illustrating what a calibrated model (left) and an uncalibrated model (right) look like: in a calibrated model, confidence (X axis) and accuracy (Y axis) are more aligned than in uncalibrated models.

Assessing model calibration is important in trustworthy AI for several reasons:

- Improving reliability: Confidence scores can help clinicians and patients assess the reliability of AI recommendations for consequential decisions, building trust in AI as a decision support tool.

- Improved regulatory alignment: Documenting LLM calibration can help create an audit trail that demonstrates the models' fairness, transparency, and robustness to regulators, supporting compliance goals and defensibility.

- Reliable decision support: Well-calibrated confidence scores can enable clinicians to effectively incorporate AI outputs into their decision-making, knowing when to rely on or discount the model's recommendations.

Why is calibration challenging with LLMs?

A well-established metric to assess calibration is Expected Calibration Error (ECE), which measures the calibration of a model over a labelled test set. ECE works by grouping model predictions into bins of probabilities (e.g. 0-10%, 10-20%, and so on), then computing the absolute difference between average probability and average accuracy in each bin; the final ECE score is the average of these bin-wise differences, weighted by the number of predictions in each bin. Figure 4 shows the typical reliability diagram plotted for ECE, which illustrates the distance between the actual calibration (the blue line) and perfect calibration (the straight diagonal line).

Figure 4: Calibration metrics such as Expected Calibration Error (ECE) provide a single value for reliability, by binning responses by confidence, then computing the gap between confidence and accuracy in each bin.
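
The binning procedure described above can be sketched in a few lines of Python. This is a minimal illustration of the standard ECE computation with equal-width bins, not TWTK's implementation; the function name `expected_calibration_error` and the toy inputs are assumptions made for the example.

```python
from typing import List, Sequence, Tuple


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> Tuple[float, List[Tuple[float, float, int]]]:
    """Return (ECE, per-bin stats) using equal-width confidence bins.

    Each per-bin entry is (mean confidence, accuracy, count); these are the
    points a reliability diagram would plot.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    if not confidences:
        raise ValueError("need at least one prediction")

    # Bucket predictions by confidence; a confidence of 1.0 goes in the last bin.
    bins: List[List[int]] = [[] for _ in range(n_bins)]
    for i, c in enumerate(confidences):
        bins[min(int(c * n_bins), n_bins - 1)].append(i)

    total = len(confidences)
    ece = 0.0
    stats = []
    for members in bins:
        if not members:
            continue  # empty bins contribute nothing
        mean_conf = sum(confidences[i] for i in members) / len(members)
        accuracy = sum(bool(correct[i]) for i in members) / len(members)
        # Weight each bin's gap by the share of predictions it holds.
        ece += (len(members) / total) * abs(accuracy - mean_conf)
        stats.append((mean_conf, accuracy, len(members)))
    return ece, stats


# Toy data (made up): four answers at 75% confidence, three of them correct,
# and four answers at 95% confidence, only two of them correct.
confs = [0.75] * 4 + [0.95] * 4
hits = [True, True, True, False, True, True, False, False]
ece, stats = expected_calibration_error(confs, hits)
print(round(ece, 3))  # 0.225
```

In the toy data, the 75% bin is perfectly calibrated (accuracy 0.75), while the 95% bin is overconfident (accuracy 0.50); the second bin alone accounts for the whole error.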

ECE cannot be directly applied to LLMs (in most real-world scenarios) for 2 main reasons:

- Assigning probabilities to LLM responses is not trivial, given that LLMs are effectively next-word predictors, so the only probabilities we know for certain are the probabilities of each word in the response given the previous words (including the prompt), but not the probability of the claim made in the response.

- Computing accuracy is also not trivial, given that LLMs can—and are often required to—generate long-form responses. This means that strict equality between generated and ideal response is not a reliable method to compute accuracy, because responses can be partially correct and/or phrased differently from the ideal response.
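
Both obstacles can be made concrete with a small sketch. The helper names (`sequence_probability`, `token_f1`), the log-probabilities, and the example sentences are all hypothetical; token-level F1 is used here only as a simple stand-in for a softer accuracy measure and is not presented as what TWTK does.

```python
import math
import re
from collections import Counter
from typing import List


def sequence_probability(token_logprobs: List[float]) -> float:
    """Probability of one exact token sequence: the product of per-token probabilities."""
    return math.exp(sum(token_logprobs))


def tokenize(text: str) -> List[str]:
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall between two strings."""
    pred, ref = tokenize(prediction), tokenize(reference)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


# Obstacle 1: token probabilities score a particular wording, not the claim.
# The log-probabilities below are made up for illustration.
print(sequence_probability([-0.1, -0.2, -0.3]))

# Obstacle 2: strict equality rejects a correct answer that is phrased differently.
reference = "Paris is the capital of France"
prediction = "The capital of France is Paris"
print(prediction == reference)          # False
print(token_f1(prediction, reference))  # 1.0
```

Token overlap is itself a crude proxy: a wrong answer that reuses most of the reference wording would still score highly, which is why long-form accuracy usually needs a more semantic comparison.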

TWTK tackles these challenges head-on, implementing cutting-edge strategies for confidence elicitation together with an easy-to-use calibration report generator (see Figure 5), designed to work in both black-box and white-box settings. By addressing these non-trivial aspects, our toolkit enables more trustworthy and responsible use of LLMs across diverse applications.

Figure 5: TWTK's calibration report tool in action.

But confidence always goes hand in hand with accuracy, right?

To test the above hypothesis, we took 2 popular LLMs (GPT 3.5 Turbo and MedLLaMA 13B) and a well-known benchmark in healthcare, and applied TWTK to generate a calibration report. The goal was to verify whether a more performant model (i.e. the one with higher accuracy) is also better calibrated, i.e. whether higher accuracy necessarily means better calibration.

We applied 3 distinct confidence elicitation strategies that TWTK offers out of the box:

- Verbal: Consists of simply including a request in the prompt to return a confidence score in addition to the response.

- Self-consistency: Consists of sampling multiple responses, then computing the degree of similarity between each candidate response and the original response. The final confidence score is the average of the similarity scores.

- Hybrid: Consists of combining the two strategies above: the verbal confidence score is taken as the starting score, which is then penalised or boosted according to the self-consistency scores.
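
The three strategies can be sketched as follows. This is an illustrative approximation under stated assumptions, not TWTK's code: the prompt wording, the function names, the character-level similarity from `difflib` (a stand-in for whatever similarity measure is actually used), and the weighted-average rule for the hybrid score are all choices made for this example.

```python
import re
from difflib import SequenceMatcher
from typing import List, Optional

# Hypothetical prompt suffix for verbal elicitation; the wording is an assumption.
VERBAL_SUFFIX = "\nAfter your answer, add a line 'Confidence: <number between 0 and 1>'."


def parse_verbal_confidence(response: str) -> Optional[float]:
    """Extract the self-reported confidence, or None if the model gave none."""
    match = re.search(r"confidence:\s*([01](?:\.\d+)?)", response, flags=re.IGNORECASE)
    if match is None:
        return None
    # Clip to [0, 1] in case the model reports something like 1.5.
    return min(max(float(match.group(1)), 0.0), 1.0)


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1]; a stand-in for a semantic measure."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def self_consistency_confidence(original: str, samples: List[str]) -> float:
    """Average similarity between the original response and each sampled response."""
    if not samples:
        raise ValueError("need at least one sampled response")
    return sum(similarity(original, s) for s in samples) / len(samples)


def hybrid_confidence(verbal: float, consistency: float, weight: float = 0.5) -> float:
    """Pull the verbal score toward the self-consistency score (assumed rule).

    A consistency score below the verbal score penalises it; one above boosts it.
    """
    return (1 - weight) * verbal + weight * consistency


# Made-up model outputs for illustration.
original = "Answer: B\nConfidence: 0.9"
samples = ["Answer: B", "Answer: B", "Answer: C"]

verbal = parse_verbal_confidence(original)
consistency = self_consistency_confidence("Answer: B", samples)
print(verbal, consistency, hybrid_confidence(verbal, consistency))
```

Character-level similarity keeps the sketch dependency-free, but it treats "Answer: B" and "Answer: C" as nearly identical; a real setup would compare the extracted answers or use a semantic similarity model.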

| Model | Strategy | NER | RE | SS | DC | QA | Avg. |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GPT 3.5-Turbo 16K | verbal | 0.45 | 0.50 | 0.12 | 0.30 | 0.13 | 0.30 |
| | self-consistency | 0.34 | 0.44 | 0.12 | 0.29 | 0.18 | 0.28 |
| | hybrid | 0.34 | 0.43 | 0.05* | 0.24 | 0.13 | 0.24 |
| MedLLaMA 13B | verbal | 0.57 | 0.70 | 0.27 | 0.70 | 0.27 | 0.50 |
| | self-consistency | 0.07* | 0.13* | 0.24 | 0.08* | 0.29 | 0.16* |
| | hybrid | 0.56 | 0.59 | 0.41 | 0.34 | 0.12* | 0.40 |

Table 1: Calibration errors (lower is better) for 2 LLMs, GPT 3.5 and MedLLaMA 13B, across 5 distinct biomedical tasks: named-entity recognition (NER), relation extraction (RE), sentence similarity (SS), document classification (DC) and question answering (QA). An open-source model that has been fine-tuned for the biomedical domain (MedLLaMA) proved more reliable on average than a much larger, paywall-protected LLM (GPT 3.5) when confidence scores are derived from a sample of responses (self-consistency). * = overall best for each task.

| Model | NER | RE | SS | DC | QA | Avg. |
| --- | --- | --- | --- | --- | --- | --- |
| GPT 3.5-Turbo 16K | 0.51 | 0.41 | 0.93 | 0.58 | 0.81 | 0.65 |
| MedLLaMA 13B | 0.42 | 0.14 | 0.28 | 0.50 | 0.69 | 0.41 |

Table 2: Task performance of GPT 3.5 and MedLLaMA 13B across the same 5 biomedical tasks.

As shown in Table 1, that turned out not to be the case: GPT 3.5, while the more performant model (as shown in Table 2), did not consistently return more reliable confidence scores than MedLLaMA 13B. With self-consistency, MedLLaMA 13B has the lower calibration error on NER, RE and DC, as well as on average.
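
The comparison can be checked directly from the two tables. The short script below copies the self-consistency rows of Table 1 and the scores of Table 2, then reports which model wins each task on each criterion. It assumes that higher is better in Table 2 and lower is better in Table 1; the helper name `winners` is made up for the example.

```python
TASKS = ["NER", "RE", "SS", "DC", "QA"]

# Table 1, self-consistency rows: calibration error (lower is better).
calibration_error = {
    "GPT 3.5-Turbo 16K": [0.34, 0.44, 0.12, 0.29, 0.18],
    "MedLLaMA 13B": [0.07, 0.13, 0.24, 0.08, 0.29],
}
# Table 2: task performance (assumed: higher is better).
performance = {
    "GPT 3.5-Turbo 16K": [0.51, 0.41, 0.93, 0.58, 0.81],
    "MedLLaMA 13B": [0.42, 0.14, 0.28, 0.50, 0.69],
}


def winners(scores, higher_is_better):
    """Return the winning model name for each task, in TASKS order."""
    pick = max if higher_is_better else min
    models = list(scores)
    return [pick(models, key=lambda m: scores[m][i]) for i in range(len(TASKS))]


best_performance = winners(performance, higher_is_better=True)
best_calibration = winners(calibration_error, higher_is_better=False)

for task, perf, calib in zip(TASKS, best_performance, best_calibration):
    print(f"{task}: best performance = {perf}, best calibration = {calib}")
```

GPT 3.5 has the higher performance score on all five tasks, yet under self-consistency it is the better-calibrated model only on SS and QA.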

References

- Lin, S., Hilton, J., & Evans, O. (2022). Teaching Models to Express Their Uncertainty in Words. arXiv. http://arxiv.org/abs/2205.14334

- Huang, Y., Liu, Y., Thirukovalluru, R., Cohan, A., & Dhingra, B. (2024). Calibrating Long-Form Generations from Large Language Models. arXiv. http://arxiv.org/abs/2402.06544

- Loyola-Gonzalez, O. (2019). Black-box vs. white-box: Understanding their advantages and weaknesses from a practical point of view. IEEE Access, 7, 154096-154113.

- Feng, H., Ronzano, F., LaFleur, J., Garber, M., de Oliveira, R., Rough, K., ... & Mack, C. (2024). Evaluation of Large Language Model Performance on the Biomedical Language Understanding and Reasoning Benchmark: Comparative Study. medRxiv, 2024-05.