Making GenAI Reliable: IQVIA LLM Trustworthiness Toolkit for Healthcare-grade AI™

In this blog post, we are excited to share the release of our IQVIA LLM Trustworthiness Toolkit. This plug-and-play module enhances reliability in LLM applications, offering strategies for confidence, consistency, and transparency. Because it is model-, data-, and domain-agnostic, the toolkit enables more dependable, healthcare-grade AI™ across diverse applications.

The Challenges of Ensuring GenAI Reliability in Healthcare Applications

Large Language Models (LLMs) have emerged as powerful tools in natural language processing, and in AI more generally, revolutionizing how we interact with machines, information, and knowledge. However, their impressive capabilities come with significant challenges. As these models generate human-like text with remarkable fluency, they also introduce uncertainties that must be accounted for. From "hallucinating" convincing but false information to behaving inconsistently across similar queries, LLMs often leave us questioning their reliability. Recent research has highlighted several concerning issues. LLMs like ChatGPT can mislead by producing text without concern for its accuracy, potentially spreading misinformation. The "Skeleton Key" jailbreak attack can cause LLMs to ignore safety guardrails and generate unsafe content. Stanford researchers found that, despite some improvements, GPT-3.5 and GPT-4 can still be misled into producing toxic, biased outputs and leaking private data.

These trustworthiness issues risk eroding user trust and limiting the impact that such technologies can achieve. But the trustworthiness of LLMs is particularly critical in healthcare, where the stakes are high and the margin for error is low.

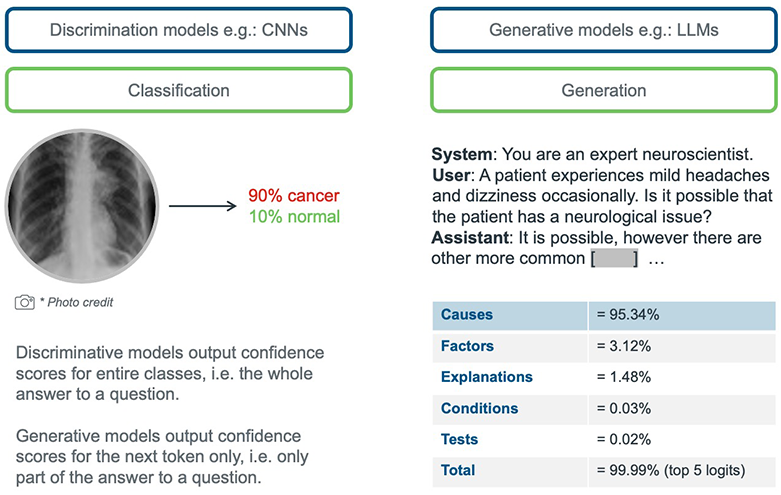

One approach to enhancing user trust in statistical models is to augment model outputs with confidence scores, an approach that has been used for decades with discriminative models. However, while discriminative models provide scores for a fixed set of outcomes, LLMs are fundamentally different. Under the hood, LLMs such as ChatGPT employ an iterative “decoding” step that generates text sequentially: at each step, the next token is chosen according to scores conditioned on the input and on every token generated so far, typically by picking the highest-scoring token or sampling from among the top candidates. Because each token depends on the text the model has already generated in the same sequence, it is inherently difficult to obtain a single, meaningful confidence score for an entire generated response.
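To make the difficulty concrete, here is a minimal sketch, not taken from the toolkit, of two common ways to collapse per-token probabilities into one number. The function name `sequence_confidence` and the log-probability values are hypothetical; real values would come from whatever model API exposes token log-probabilities.

```python
import math

def sequence_confidence(token_logprobs):
    """Combine per-token log-probabilities into two sequence-level scores.

    Returns (joint probability, length-normalized geometric mean).
    Neither score is a calibrated estimate of factual correctness.
    """
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    total = sum(token_logprobs)
    joint = math.exp(total)                           # probability of this exact token sequence
    geo_mean = math.exp(total / len(token_logprobs))  # average per-token probability
    return joint, geo_mean

# Hypothetical responses: every token has probability 0.9,
# but one response is ten times longer than the other.
short_answer = [math.log(0.9)] * 3
long_answer = [math.log(0.9)] * 30

short_joint, short_norm = sequence_confidence(short_answer)
long_joint, long_norm = sequence_confidence(long_answer)

print(f"short: joint={short_joint:.3f}, normalized={short_norm:.3f}")
print(f"long:  joint={long_joint:.3f}, normalized={long_norm:.3f}")
```

The joint probability collapses as the response grows even though the model is equally sure of every token, while the normalized score hides length entirely. Both measure how likely the wording is, not whether the content is true, which is why neither serves as a ready-made confidence score.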

Confidence scores in LLMs are not straightforward

With that in mind, how can we harness the potential of LLMs while ensuring their outputs are dependable enough for healthcare applications?

Introducing the IQVIA LLM Trustworthiness Toolkit

IQVIA's NLP team is driven by the transformative potential of AI in healthcare. As a leader in this space, we've long recognized AI's capacity to revolutionize healthcare delivery and improve patient outcomes, and we have worked hand in hand with our customers on numerous AI deployments that deliver patient impact. A recent and notable example in clinical decision support earned us the 2023 AI breakthrough award for best innovation in healthcare.

Providing data transformation for unstructured text to the life sciences industry for over 20 years

Our extensive work and direct involvement in these complex problems have led us to develop the IQVIA LLM Trustworthiness Toolkit, which tackles key technical barriers to realizing the full potential of LLMs in healthcare. Specifically, the following trustworthiness challenges are being addressed:

Calibrating confidence in complex scenarios

- The toolkit implements advanced confidence elicitation strategies to mitigate LLM overconfidence in ambiguous or novel medical situations.

- It provides robust methods to accurately detect and communicate model uncertainty, crucial for handling edge cases in healthcare applications.
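One standard way to quantify overconfidence is expected calibration error (ECE), which compares stated confidence with observed accuracy. The sketch below is a generic illustration under assumed inputs, not the toolkit's method; the function name, the bin count, and the example scores are all hypothetical.

```python
def expected_calibration_error(confidences, correct, n_bins=5):
    """Weighted average gap between mean confidence and accuracy per bin.

    confidences: floats in [0, 1], one per answer.
    correct: booleans, True where the answer was judged correct.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    if not confidences:
        raise ValueError("need at least one scored answer")

    # Group answers into equal-width confidence bins.
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[idx].append((conf, ok))

    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / total) * abs(avg_conf - accuracy)
    return ece

# Hypothetical evaluation data: the same answers, half of them correct,
# scored once with inflated confidence and once with matching confidence.
outcomes = [True, True, False, False]
overconfident_ece = expected_calibration_error([0.95, 0.95, 0.95, 0.95], outcomes)
calibrated_ece = expected_calibration_error([0.5, 0.5, 0.5, 0.5], outcomes)

print(f"overconfident ECE: {overconfident_ece:.2f}")
print(f"calibrated ECE:    {calibrated_ece:.2f}")
```

A model that reports 95% confidence while being right half the time shows a large gap; a model whose stated confidence matches its hit rate shows none. Computing a metric like this requires a labeled evaluation set, which is an assumption of the sketch.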

Ensuring consistency across interactions

- By leveraging multiple sampling techniques, the toolkit addresses the issue of inconsistent responses to similar medical queries.

- It offers a comprehensive evaluation framework to measure and improve response consistency, enhancing reliability in healthcare settings.
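As a rough illustration of sampling-based consistency checks, the sketch below takes several answers to the same question and reports how often the most common answer appears. It is a simplified stand-in rather than the toolkit's implementation: the `normalize` and `consistency_score` helpers are hypothetical, the sample strings are made up, and in practice the answers would come from repeated calls to a model.

```python
from collections import Counter

def normalize(answer):
    """Lowercase, collapse whitespace, and drop a trailing period."""
    return " ".join(answer.lower().split()).rstrip(".")

def consistency_score(sampled_answers):
    """Return the majority answer and the fraction of samples that agree with it."""
    if not sampled_answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(normalize(a) for a in sampled_answers)
    top_answer, top_count = counts.most_common(1)[0]
    return top_answer, top_count / len(sampled_answers)

# Made-up answers standing in for five samples of the same query.
samples = ["Metformin", "metformin.", "Metformin ", "Insulin", "metformin"]
majority_answer, agreement = consistency_score(samples)

print(f"majority answer: {majority_answer}, agreement: {agreement:.0%}")
```

Exact string matching after light normalization only works for short, closed-form answers. Free-text responses would need a semantic comparison instead, and a low agreement rate is a signal to route the query for human review rather than proof that any single answer is wrong.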

Enhancing transparency and explainability

- The toolkit integrates innovative approaches to improve the traceability of LLM outputs, helping to clarify reasoning paths in medical recommendations.

- It incorporates cutting-edge techniques for post-hoc explanation and source attribution, balancing the need for explainability with maintaining model performance.

How IQVIA can help

At IQVIA, we're developing novel applications of our LLM Trustworthiness Toolkit to address healthcare-specific challenges, focusing on enhancing confidence in AI-generated outputs for important healthcare domain problems such as:

- Clinical Decision Support: Improving reliability of AI-assisted diagnoses and treatment recommendations by providing confidence scores that clinicians can interpret alongside LLM outputs.

- Medical Literature Analysis: Enhancing trustworthiness of AI-driven systematic reviews and meta-analyses by quantifying confidence in extracted information and synthesized conclusions.

- Drug Safety Monitoring: Increasing accuracy in identifying potential adverse drug reactions from diverse data sources by assigning confidence levels to AI-detected signals.

- Clinical Trial Design: Bolstering confidence in AI-suggested protocol designs by providing transparency into the model's reasoning and confidence in its recommendations.

- Medical Coding and Billing: Improving precision in automated coding processes by assigning confidence scores to AI-generated codes, facilitating human review of uncertain cases.

- Health Trend Prediction: Enhancing credibility of AI-forecasted public health trends by providing confidence intervals and highlighting areas of uncertainty.

Interested in applying this toolkit to your work? We'd love to hear from you. Contact us for more information or to discuss your specific use case.

References

- 2023 AI breakthrough award for best innovation in healthcare.

- Hicks, M.T., Humphries, J. & Slater, J. ChatGPT is bullshit. Ethics Inf Technol 26, 38 (2024).

- Mitigating Skeleton Key, a new type of generative AI jailbreak technique | Microsoft Security Blog.

- How Trustworthy Are Large Language Models Like GPT?

- Xiong, Miao, Zhiyuan Hu, Xinyang Lu, Yifei Li, Jie Fu, Junxian He, and Bryan Hooi. ‘Can LLMs Express Their Uncertainty? An Empirical Evaluation of Confidence Elicitation in LLMs’. arXiv, 17 March 2024.

- Tian, Katherine, Eric Mitchell, Allan Zhou, Archit Sharma, Rafael Rafailov, Huaxiu Yao, Chelsea Finn, and Christopher D. Manning. ‘Just Ask for Calibration: Strategies for Eliciting Calibrated Confidence Scores from Language Models Fine-Tuned with Human Feedback’. arXiv, 24 October 2023.

- Zhao, Theodore, Mu Wei, J. Samuel Preston, and Hoifung Poon. ‘Pareto Optimal Learning for Estimating Large Language Model Errors’. arXiv, 22 May 2024.

- Zhou, Han, Xingchen Wan, Lev Proleev, Diana Mincu, Jilin Chen, Katherine Heller, and Subhrajit Roy. ‘Batch Calibration: Rethinking Calibration for In-Context Learning and Prompt Engineering’. arXiv, 24 January 2024.