Secure your platforms and products to drive insights from AI and accelerate healthcare transformation.

As artificial intelligence (AI) continues to expand across various sectors, securing these systems against potential threats has become increasingly important. Our previous article, A Blueprint for Defensible AI Systems, highlighted the need for a quality management system for AI. This article turns to the critical aspects of AI system design and the mitigation strategies that can address security threats both within and outside the system.

AI systems are susceptible to various attacks that can compromise their functionality, reliability, and trustworthiness. The NIST Adversarial Machine Learning report and guidelines from the OWASP Machine Learning Security Top Ten provide valuable insights into addressing AI vulnerabilities, particularly at the intersection of data protection and AI security. Effective AI security frameworks are essential to safeguard these systems against potential threats.

Data protection as a defense mechanism

Effective data protection strategies, such as anonymization, play an important role in mitigating AI threats. Anonymization techniques are intended to keep data from being traced back to individuals, reducing the risk of re-identification in the event of a security breach. This approach is particularly effective at limiting what model inversion attacks, which attempt to extract sensitive information from the model, can reveal about data subjects.
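One common way to quantify how well a dataset resists re-identification is k-anonymity: every combination of quasi-identifier values should be shared by at least k records. The sketch below is an illustration only, not the method used in this article; the field names (`age_band`, `zip3`) and the sample records are hypothetical.

```python
from collections import Counter


def k_anonymity(records, quasi_identifiers):
    """Return the size of the smallest group of records that share the
    same quasi-identifier values (the dataset's k). Empty input gives 0."""
    if not records:
        return 0
    groups = Counter(
        tuple(record[q] for q in quasi_identifiers) for record in records
    )
    return min(groups.values())


# Hypothetical, already-generalized records (age bands, truncated postal codes).
records = [
    {"age_band": "30-39", "zip3": "K1A", "diagnosis": "asthma"},
    {"age_band": "30-39", "zip3": "K1A", "diagnosis": "diabetes"},
    {"age_band": "40-49", "zip3": "K2B", "diagnosis": "asthma"},
    {"age_band": "40-49", "zip3": "K2B", "diagnosis": "flu"},
    {"age_band": "40-49", "zip3": "K2B", "diagnosis": "asthma"},
]

k = k_anonymity(records, ["age_band", "zip3"])
print(k)
```

A low k means some individuals sit in very small groups and are easier to single out. Note that k-anonymity says nothing about what an AI system might infer from the remaining attributes, which is the concern raised in the next paragraph.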

As the OWASP Machine Learning Security Top Ten outlines, however, many other AI security threats can put data derived from people at risk. Ultimately, as “end users” of data, AI systems change the threat landscape by introducing more risks than if the end user were human. Unlike human recipients, AI systems can potentially infer other sensitive information from anonymized datasets beyond identities, and at a much larger scale. Extending defenses to cover AI security threats is therefore becoming a critical feature of defensible AI.

Comprehensive strategies for AI security

To effectively protect AI systems, we need to implement a combination of strategies addressing the various classes of issues identified by NIST: availability breakdown, integrity violations, privacy breach, and abuse violations. These strategies can include continuous monitoring and ethical hacking practices that proactively test and secure AI systems against potential vulnerabilities. By considering these classes of issues and developing comprehensive solutions around them, organizations can better safeguard their AI systems and data.

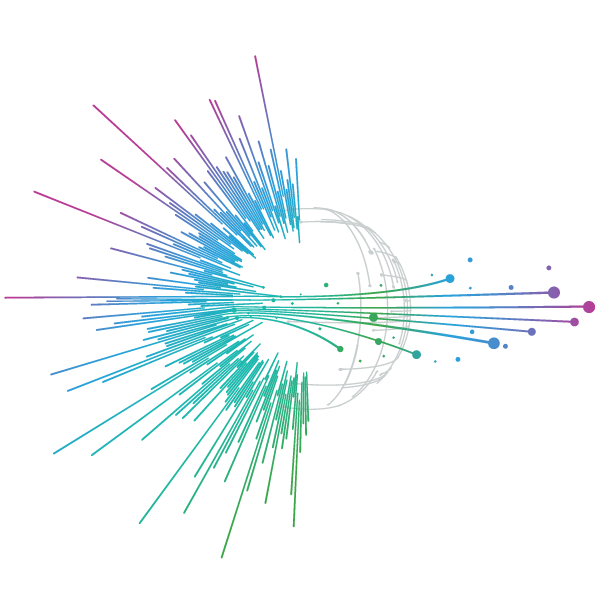

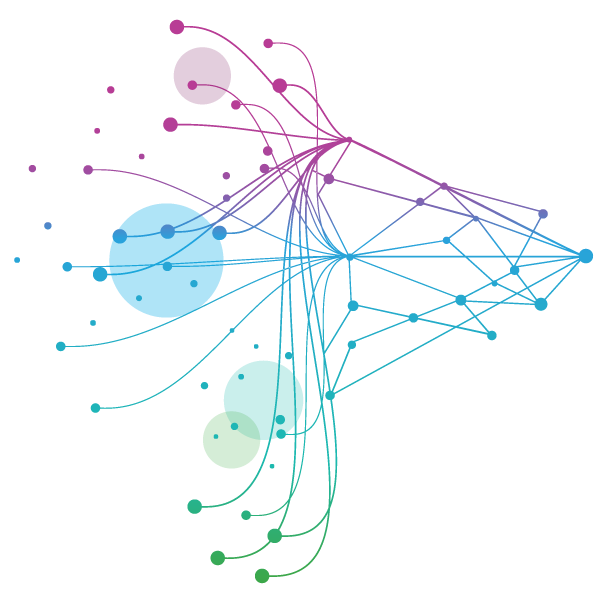

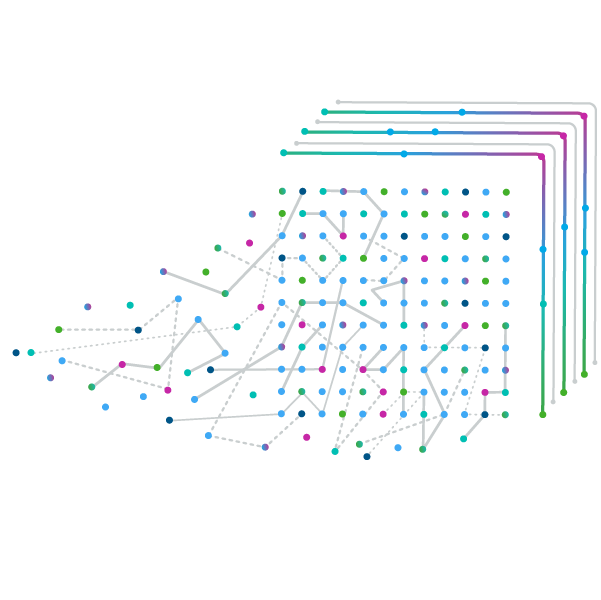

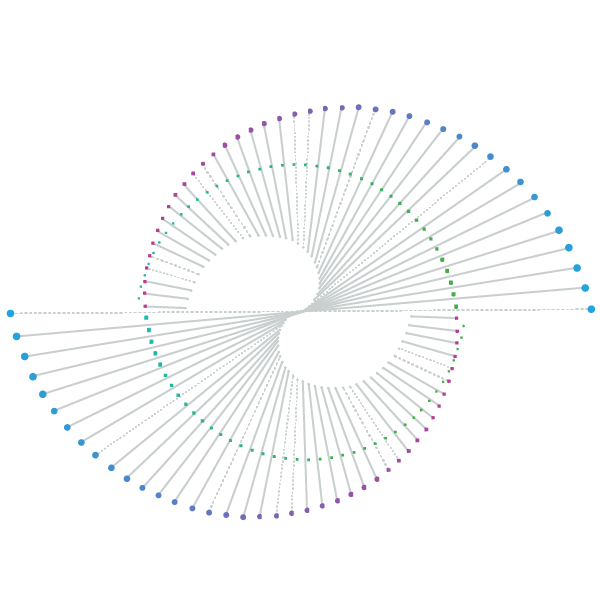

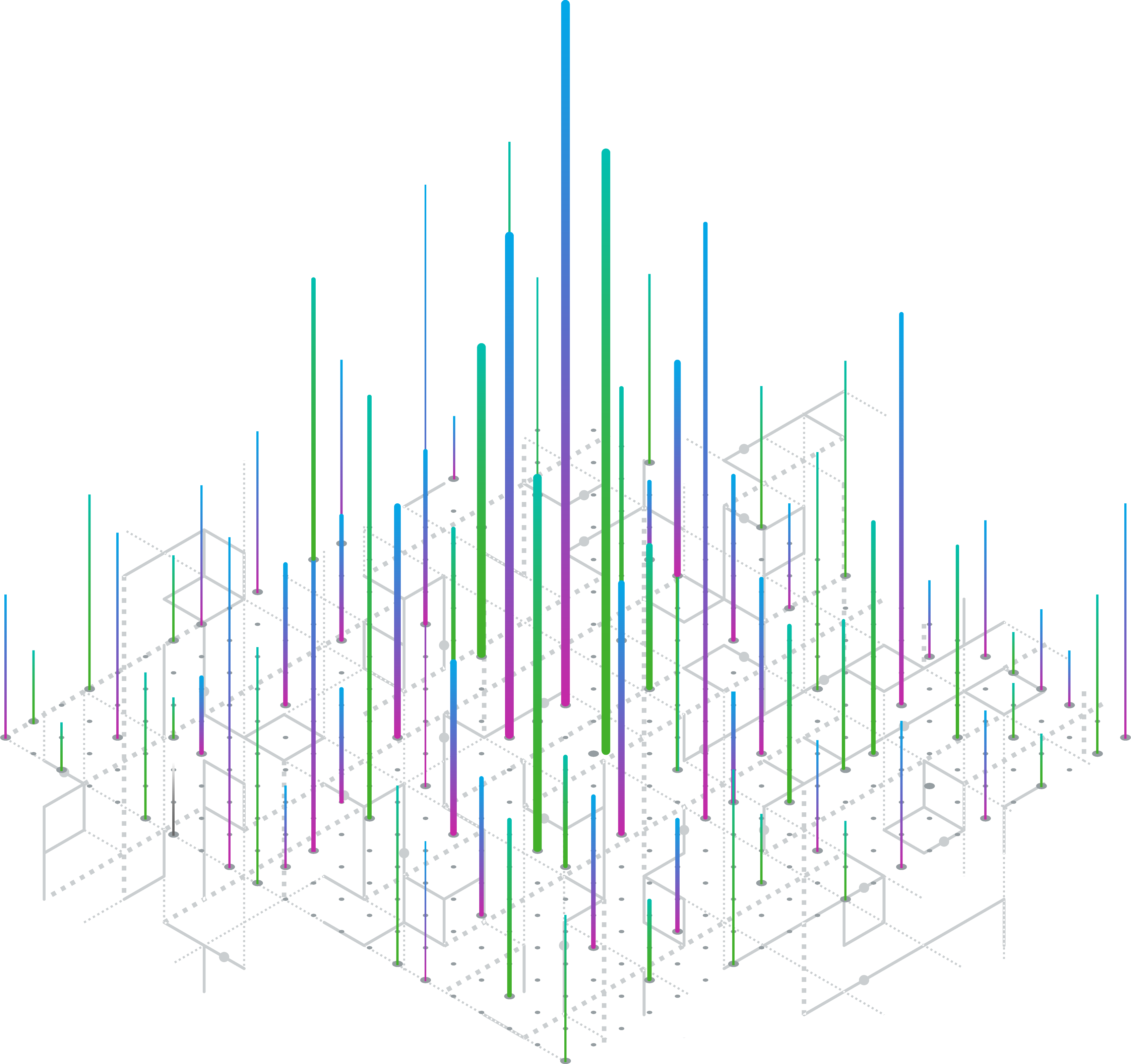

The NIST Adversarial Machine Learning report categorizes risks into four main areas, each requiring targeted mitigation strategies (examples of which are shown in the accompanying images):

Availability breakdown: disrupting the performance of an AI model at deployment. These attacks can occur through:

- Data poisoning: controlling a fraction of the training set to corrupt the model.

- Model poisoning: manipulating the model parameters directly.

- Energy-latency attacks: using query access to degrade performance.
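To make the data poisoning case concrete, the following minimal sketch trains a toy one-dimensional nearest-centroid classifier on clean data and again after an attacker injects a fraction of mislabeled points. The classifier, the data values, and the function names are all hypothetical and chosen only for illustration.

```python
from statistics import mean


def fit_centroids(samples):
    """samples: list of (value, label). Return {label: mean value}."""
    by_label = {}
    for value, label in samples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids, value):
    """Assign the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))


def accuracy(centroids, samples):
    """Fraction of (value, label) samples classified correctly."""
    return sum(predict(centroids, v) == y for v, y in samples) / len(samples)


# Hypothetical clean training data: class 0 clusters low, class 1 clusters high.
clean_train = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]
# Attacker-controlled points: far-away values deliberately labeled as class 0.
poison = [(20.0, 0), (20.0, 0), (20.0, 0)]
test_set = [(1.5, 0), (2.5, 0), (7.5, 1), (8.5, 1)]

clean_model = fit_centroids(clean_train)
poisoned_model = fit_centroids(clean_train + poison)

clean_accuracy = accuracy(clean_model, test_set)
poisoned_accuracy = accuracy(poisoned_model, test_set)
print(clean_accuracy, poisoned_accuracy)
```

In this toy setup the injected points drag the class 0 centroid away from the genuine class 0 data, so the poisoned model stops recognizing that class. Real models and real attacks are far more subtle, but the mechanism, a small attacker-controlled share of training data shifting what the model learns, is the same.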

Integrity violations: targeting the accuracy of an AI model’s output, resulting in incorrect predictions. These can include:

- Evasion attacks: modifying test samples to create adversarial examples that mislead the model while appearing normal to humans.

- Poisoning attacks: as with the poisoning described under availability breakdown, but here the model is corrupted during training to induce incorrect outputs.
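The evasion attacks listed above can be illustrated on a toy linear classifier. The sketch below nudges each input feature by at most `epsilon` in the direction given by the sign of the corresponding weight, in the style of a sign-of-gradient perturbation. The weights, the input, and `epsilon` are hypothetical values picked for illustration, and it assumes the attacker knows the model's weights.

```python
def predict(weights, bias, x):
    """Linear classifier: class 1 if the weighted score is non-negative."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score >= 0 else 0


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def evade(weights, bias, x, epsilon):
    """Shift every feature by at most epsilon in the direction that pushes
    the score toward the opposite class. For a linear model the gradient of
    the score with respect to x is simply the weight vector."""
    direction = -1 if predict(weights, bias, x) == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model parameters and input.
weights = [2.0, -1.0, 0.5]
bias = -0.5
x = [0.6, 0.3, 0.2]

adversarial = evade(weights, bias, x, epsilon=0.2)
print(predict(weights, bias, x), predict(weights, bias, adversarial))
```

Each feature moves by no more than 0.2, yet the predicted class changes. Mitigations such as adversarial training aim to make the model's decision less sensitive to small, bounded changes like this.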

Privacy breach: extracting sensitive information from the training data or the model itself. Types of privacy attacks include:

- Data privacy attacks: inferring content or features of the training data.

- Membership inference attacks: determining if specific data was part of the training set.

- Model privacy attacks: extracting detailed information about the model.
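A simple form of the membership inference attack described above relies on the observation that models are often more confident on records they were trained on. The sketch below guesses "member" whenever the model's confidence in the true label meets a threshold. The confidence values and the threshold are hypothetical, and this is only one basic variant of the attack.

```python
def infer_membership(confidences, threshold):
    """Guess 'member' when the model's confidence in the true label
    is at or above the threshold."""
    return [c >= threshold for c in confidences]


def attack_accuracy(guesses, is_member):
    """Fraction of membership guesses that match the ground truth."""
    correct = sum(g == m for g, m in zip(guesses, is_member))
    return correct / len(is_member)


# Hypothetical confidences: the first four records were in the training set,
# the last four were held out.
train_conf = [0.99, 0.97, 0.95, 0.80]
holdout_conf = [0.90, 0.70, 0.65, 0.55]
confidences = train_conf + holdout_conf
is_member = [True] * len(train_conf) + [False] * len(holdout_conf)

guesses = infer_membership(confidences, threshold=0.92)
accuracy = attack_accuracy(guesses, is_member)
print(accuracy)
```

An attack accuracy well above 0.5 on a balanced set like this one suggests the model leaks membership information. The same measurement can be used defensively, as a test run against a model before release.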

Abuse violations: repurposing the system’s intended use for other objectives through indirect prompt injection. This can include:

- Fraud: exploiting the system for financial gain.

- Malware: spreading malicious software.

- Manipulation: influencing outcomes or behavior for malicious purposes.

Conclusions

As AI continues to evolve, the importance of structured frameworks and standards for security becomes increasingly critical. The ISO/IEC 42001 AI Management System and the NIST AI Risk Management Framework provide comprehensive guidance for ensuring responsible and defensible AI deployments. By integrating these standards into data governance practices and robust data infrastructure, organizations can enhance data protection, scalability, and decision-making accuracy.

The future of AI governance lies in continuous improvement, ethical considerations, and the seamless integration of AI and privacy solutions. Implementing an integrated approach to mitigating AI attacks will help secure AI systems and protect sensitive data in an ever-evolving threat landscape. Contact us to learn more and see how our advisory or consulting services can help you drive trustworthy insights at scale.
