-

Americas

-

Asia & Oceania

-

A-I

J-Z

EMEA Thought Leadership

Developing IQVIA’s positions on key trends in the pharma and life sciences industries, with a focus on EMEA.

Learn more -

Middle East & Africa

EMEA Thought Leadership

Developing IQVIA’s positions on key trends in the pharma and life sciences industries, with a focus on EMEA.

Learn more

Regions

-

Americas

-

Asia & Oceania

-

Europe

-

Middle East & Africa

-

Americas

-

Asia & Oceania

-

Europe

Europe

- Adriatic

- Belgium

- Bulgaria

- Czech Republic

- Deutschland

- España

- France

- Greece

- Hungary

- Ireland

- Israel

- Italia

EMEA Thought Leadership

Developing IQVIA’s positions on key trends in the pharma and life sciences industries, with a focus on EMEA.

Learn more -

Middle East & Africa

EMEA Thought Leadership

Developing IQVIA’s positions on key trends in the pharma and life sciences industries, with a focus on EMEA.

Learn more

SOLUTIONS

-

Research & Development

-

Real World Evidence

-

Commercialization

-

Safety & Regulatory Compliance

-

Technologies

LIFE SCIENCE SEGMENTS

HEALTHCARE SEGMENTS

- Information Partner Services

- Financial Institutions

- Global Health

- Government

- Patient Associations

- Payers

- Providers

THERAPEUTIC AREAS

- Cardiovascular

- Cell and Gene Therapy

- Central Nervous System

- GI & Hepatology

- Infectious Diseases and Vaccines

- Oncology & Hematology

- Pediatrics

- Rare Diseases

- View All

Impacting People's Lives

"We strive to help improve outcomes and create a healthier, more sustainable world for people everywhere.

LEARN MORE

Harness the power to transform clinical development

Reimagine clinical development by intelligently connecting data, technology, and analytics to optimize your trials. The result? Faster decision making and reduced risk so you can deliver life-changing therapies faster.

Research & Development OverviewResearch & Development Quick Links

Real World Evidence. Real Confidence. Real Results.

Generate and disseminate evidence that answers crucial clinical, regulatory and commercial questions, enabling you to drive smarter decisions and meet your stakeholder needs with confidence.

REAL WORLD EVIDENCE OVERVIEWReal World Evidence Quick Links

See markets more clearly. Opportunities more often.

Elevate commercial models with precision and speed using AI-driven analytics and technology that illuminate hidden insights in data.

COMMERCIALIZATION OVERVIEWCommercialization Quick Links

Service driven. Tech-enabled. Integrated compliance.

Orchestrate your success across the complete compliance lifecycle with best-in-class services and solutions for safety, regulatory, quality and medical information.

COMPLIANCE OVERVIEWSafety & Regulatory Compliance Quick Links

Intelligence that transforms life sciences end-to-end.

When your destination is a healthier world, making intelligent connections between data, technology, and services is your roadmap.

TECHNOLOGIES OVERVIEWTechnology Quick Links

CLINICAL PRODUCTS

COMMERCIAL PRODUCTS

COMPLIANCE, SAFETY, REG PRODUCTS

BLOGS, WHITE PAPERS & CASE STUDIES

Explore our library of insights, thought leadership, and the latest topics & trends in healthcare.

DISCOVER INSIGHTSTHE IQVIA INSTITUTE

An in-depth exploration of the global healthcare ecosystem with timely research, insightful analysis, and scientific expertise.

SEE LATEST REPORTSFEATURED INNOVATIONS

-

IQVIA Connected Intelligence™

-

IQVIA Healthcare-grade AI®

-

-

Human Data Science Cloud

-

IQVIA Innovation Hub

-

Decentralized Trials

-

Patient Experience Solutions with Apple devices

WHO WE ARE

- Our Story

- Our Impact

- Commitment to Global Health

- Code of Conduct

- Sustainability

- Privacy

- Executive Team

NEWS & RESOURCES

Unlock your potential to drive healthcare forward

By making intelligent connections between your needs, our capabilities, and the healthcare ecosystem, we can help you be more agile, accelerate results, and improve patient outcomes.

LEARN MORE

IQVIA AI is Healthcare-grade AI

Building on a rich history of developing AI for healthcare, IQVIA AI connects the right data, technology, and expertise to address the unique needs of healthcare. It's what we call Healthcare-grade AI.

LEARN MORE

Your healthcare data deserves more than just a cloud.

The IQVIA Human Data Science Cloud is our unique capability designed to enable healthcare-grade analytics, tools, and data management solutions to deliver fit-for-purpose global data at scale.

LEARN MORE

Innovations make an impact when bold ideas meet powerful partnerships

The IQVIA Innovation Hub connects start-ups with the extensive IQVIA network of assets, resources, clients, and partners. Together, we can help lead the future of healthcare with the extensive IQVIA network of assets, resources, clients, and partners.

LEARN MORE

Proven, faster DCT solutions

IQVIA Decentralized Trials deliver purpose-built clinical services and technologies that engage the right patients wherever they are. Our hybrid and fully virtual solutions have been used more than any others.

LEARN MORE

IQVIA Patient Experience Solutions with Apple devices

Empowering patients to personalize their healthcare and connecting them to caregivers has the potential to change the care delivery paradigm.

LEARN MOREIQVIA Careers

Featured Careers

Stay Connected

WE'RE HIRING

"At IQVIA your potential has no limits. We thrive on bold ideas and fearless innovation. Join us in reimagining what’s possible.

VIEW ROLES- Blogs

- An Integrated Approach to Securing AI

As artificial intelligence (AI) continues to expand across various sectors, securing these systems against potential threats has become increasingly important. Our previous article, A Blueprint for Defensible AI Systems, highlighted the need for a quality management system for AI. The critical aspects of AI system design and mitigation strategies can address security threats both within and outside the system.

AI systems are susceptible to various attacks that can compromise their functionality, reliability, and trustworthiness. The NIST Adversarial Machine Learning report and guidelines from the OWASP Machine Learning Security Top Ten provide valuable insights into addressing AI vulnerabilities, particularly at the intersection of data protection and AI security. Effective AI security frameworks are essential to safeguard these systems against potential threats.

Data protection as a defense mechanism

Effective data protection strategies, such as anonymization, play an important role in mitigating AI threats. Anonymization techniques ensure that data cannot be traced back to individuals, reducing the risk of re-identification in the event of a security breach. This approach is particularly effective at limiting the scope of model inversion attacks , which attempt to extract sensitive information from the model, with regards to extracting details about data subjects.

As the OWASP Machine Learning Security Top Ten outlines, however, many other AI security threats can put data derived from people at risk. Ultimately, as “end users” of data, AI systems change the threat landscape by introducing more risks than if the end user were human. Unlike human recipients, AI systems can potentially infer other sensitive information from anonymized datasets beyond identities, and at a much larger scale. Extending defensive needs to include AI security threats is therefore becoming a critical feature of defensible AI.

Comprehensive strategies for AI security

To effectively protect AI systems, we need to implement a combination of strategies addressing the various classes of issues identified by NIST: availability breakdown, integrity violations, privacy breach, and abuse violations. These strategies can include continuous monitoring and ethical hacking practices that proactively test and secure AI systems against potential vulnerabilities. By considering these classes of issues and developing comprehensive solutions around them, organizations can better safeguard their AI systems and data.

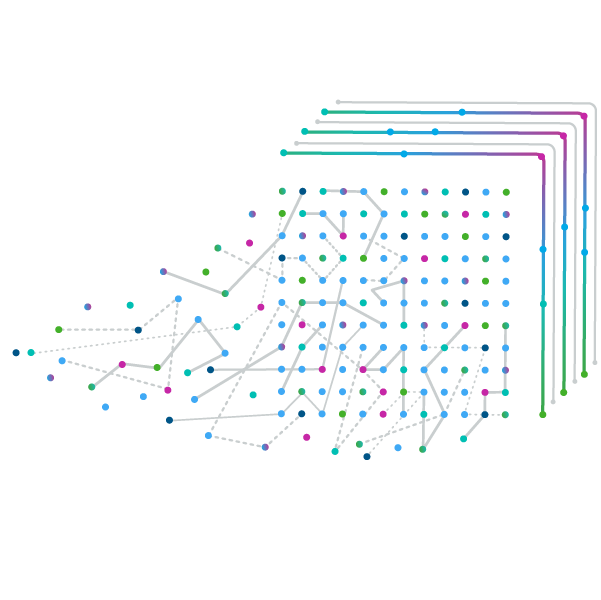

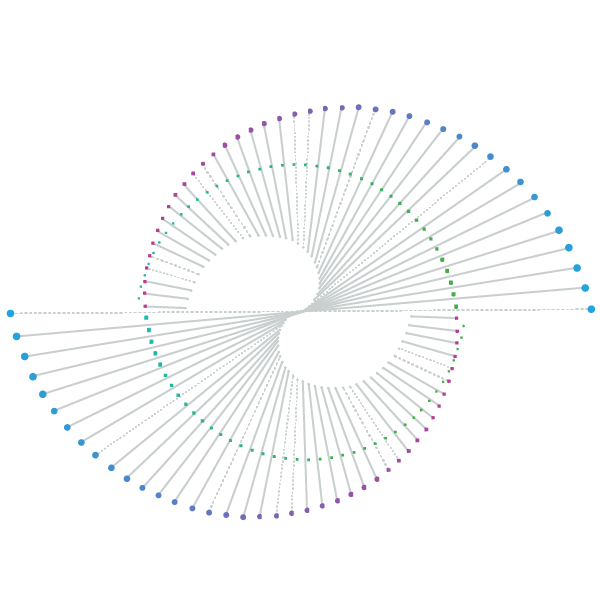

The NIST Adversarial Machine Learning report categorizes risks into four main areas, each requiring targeted mitigation strategies (examples of which are shown in the image below):

Availability breakdown: disrupting the performance of an AI model at deployment. These attacks can occur through:

- Data poisoning: controlling a fraction of the training set to corrupt the model.

- Model poisoning: manipulating the model parameters directly.

- Energy-latency attacks: using query access to degrade performance.

Integrity violations: targeting the accuracy of an AI model’s output, resulting in incorrect predictions. These can include:

- Evasion attacks: modifying test samples to create adversarial examples that mislead the model while appearing normal to humans.

- Poisoning attacks: similar to the previous, this time corrupting the model during training to induce incorrect outputs.

Privacy breach: extracting sensitive information from the training data or the model itself. Types of privacy attacks include:

- Data privacy attacks: inferring content or features of the training data.

- Membership inference attacks: determining if specific data was part of the training set.

- Model privacy attacks: extracting detailed information about the model.

Abuse violations: repurposing the system’s intended use for other objectives through indirect prompt injection. This can include:

- Fraud: exploiting the system for financial gain.

- Malware: spreading malicious software.

- Manipulation: influencing outcomes or behavior for malicious purposes.

Conclusions

As AI continues to evolve, the importance of structured frameworks and standards for security becomes increasingly critical. The ISO/IEC 42001 AI Management System and the NIST AI Risk Management Framework provide comprehensive guidance for ensuring responsible and defensible AI deployments. By integrating these standards into data governance practices and robust data infrastructure, organizations can enhance data protection, scalability, and decision-making accuracy.

The future of AI governance lies in continuous improvement, ethical considerations, and the seamless integration of AI and privacy solutions. Implementing an integrated approach to mitigating AI attacks will help secure AI systems and protect sensitive data in an ever-evolving threat landscape. Contact us when you want to learn more and see how our advisory or consulting services can help you drive trustworthy insights at scale.